My 2026 Investing Plan

In today's newsletter, I cover:

- Portfolio Update (in USD)

- 2025 Stock Market Review & State of AI

- What type of stocks benefit the most from today's AI and stand to be the real big winners (Premium subscribers)

- My Investing Plan for 2026 (Premium subscribers)

Today's article is going to be slightly more technical than usual, so bear with me. I think it is important to lay out my full reasoning so you get the complete context behind how my portfolio is positioned. If anything is unclear, remember to ask ChatGPT or Gemini!

Portfolio Update

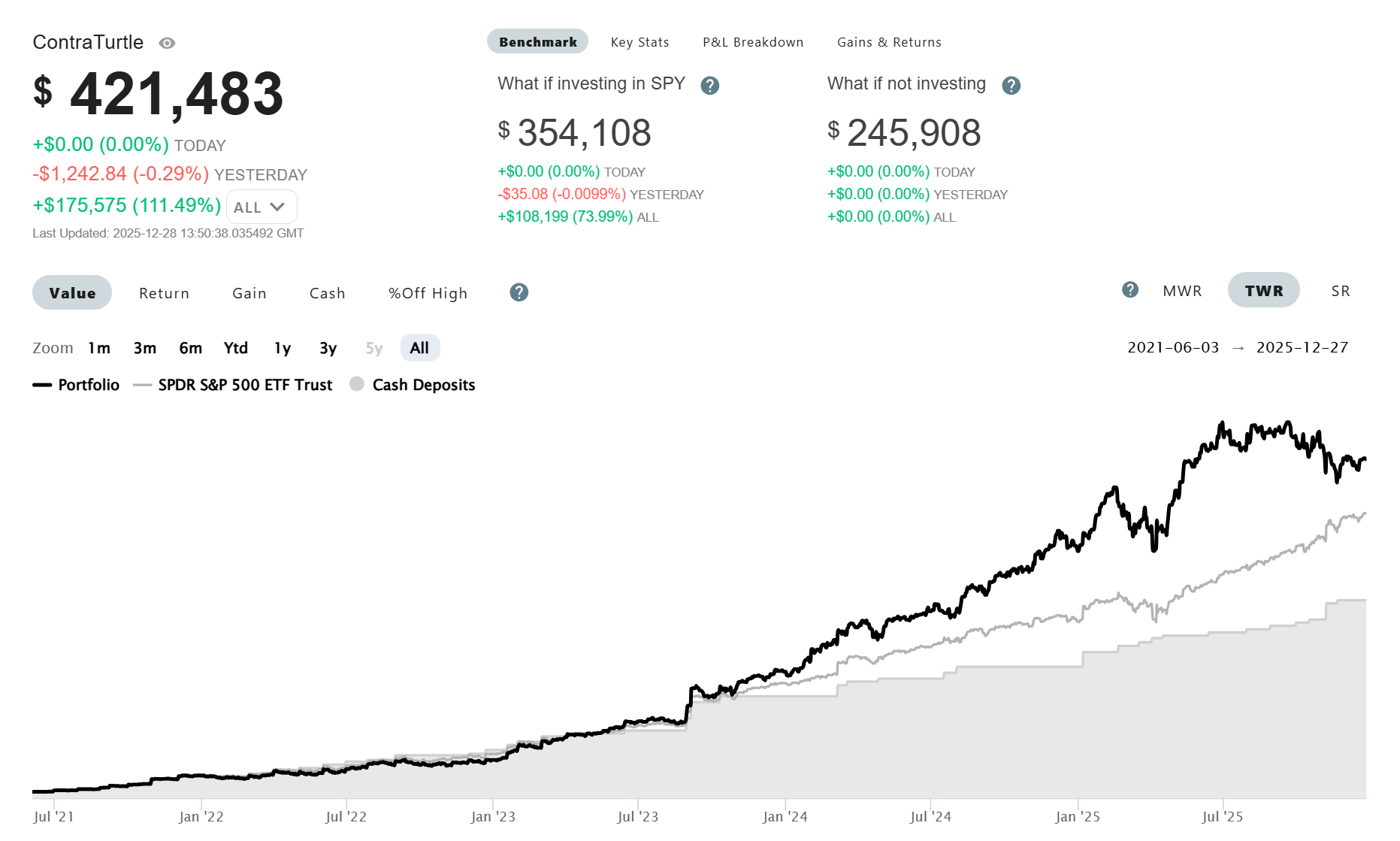

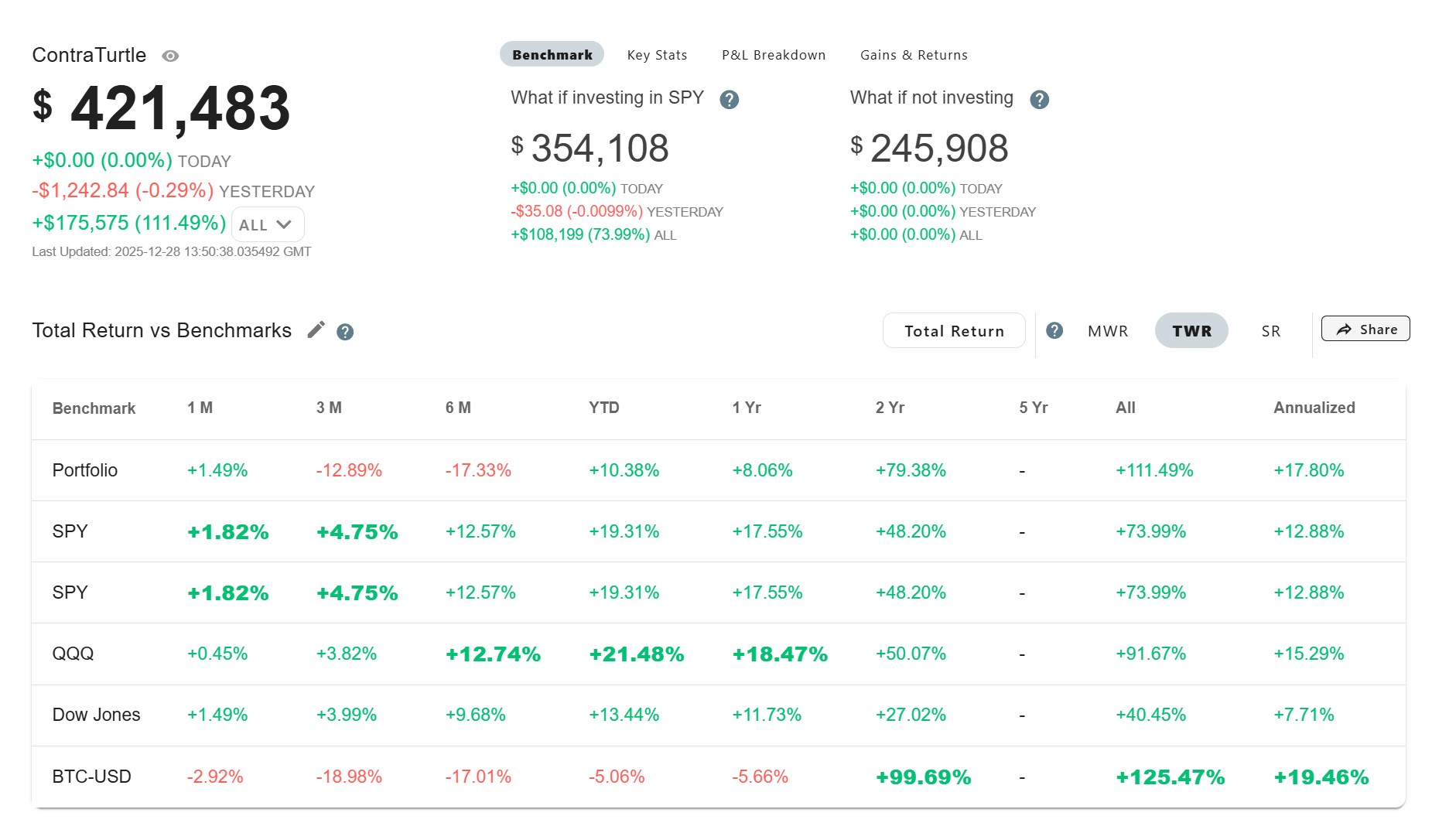

Over 4.5 years, the portfolio has returned 17.8% a year on a time-weighted annualized basis, outperforming the S&P 500 by about 5 percentage points a year. Portfolio performance is never a straight line upwards, especially when my holdings differ so greatly from the index.

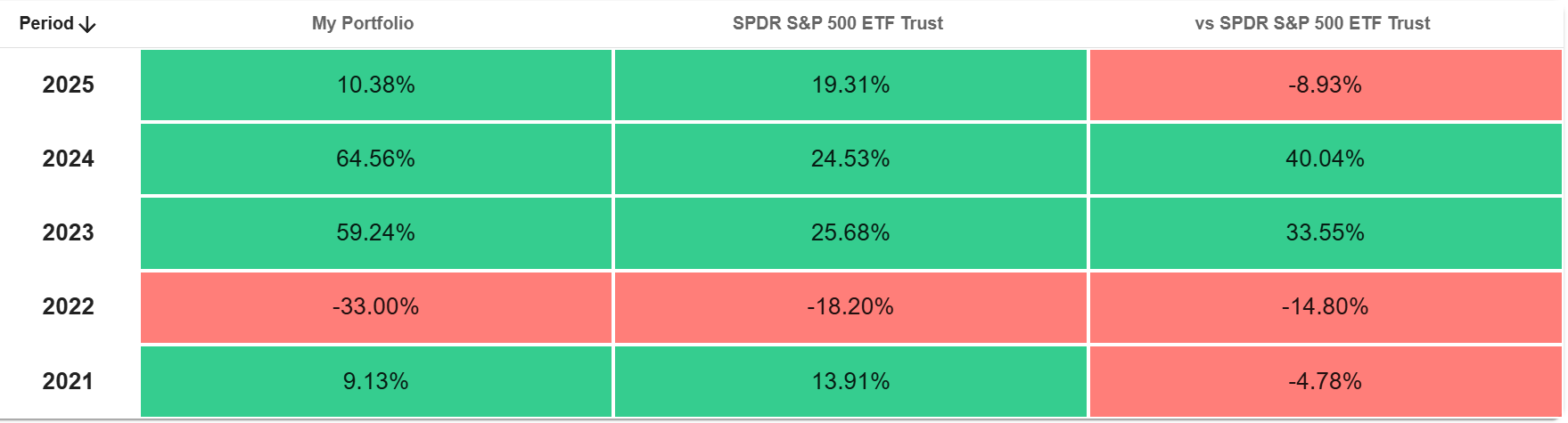

As a long-term investor, I expect to underperform the market in some years, such as 2022 and 2025 in my case, and to outperform in others, such as 2023 and 2024 (see table above). In fact, I tend to embrace underperforming years, as they are usually opportunities to top up on my high-conviction stocks.

The key is for the strong years to more than compensate for the weaker ones, resulting in a CAGR that beats the market over the long term. (Beating the market on a 10-year annualized time-weighted return basis is what I'm gunning for before concluding that it isn't all just luck.)
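To make this concrete, here is a minimal Python sketch of how yearly returns compound into an annualized (CAGR) figure. Time-weighted returns link each period's return geometrically, so the timing of deposits and withdrawals does not distort the result. The yearly returns below are made-up placeholders, not my portfolio's or the S&P 500's actual numbers, and the function names are my own.

```python
from math import prod


def cumulative_return(period_returns):
    """Geometrically link sub-period returns (e.g. 0.25 means +25%).

    Time-weighted approach: each period's return is measured on its own
    (between cash flows), then compounded, so deposits and withdrawals
    don't distort the result.
    """
    return prod(1 + r for r in period_returns) - 1


def annualized_return(period_returns, years):
    """Convert the cumulative return over `years` into a compound annual rate (CAGR)."""
    growth = 1 + cumulative_return(period_returns)
    return growth ** (1 / years) - 1


# Hypothetical yearly returns (placeholders only, not real performance data).
portfolio = [-0.30, 0.60, 0.45, 0.10, 0.05]
index = [-0.18, 0.26, 0.25, 0.18, 0.17]

for name, returns in [("portfolio", portfolio), ("index", index)]:
    cagr = annualized_return(returns, years=len(returns))
    print(f"{name}: {cagr:.1%} a year")
```

In this hypothetical, the portfolio trails the index in three of the five years, including a -30% year, yet still ends with the higher annualized return because its strong years compound more. The same `annualized_return` function accepts fractional periods, e.g. `years=4.5` for a 4.5-year track record measured with monthly returns.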

During periods of underperformance, the temptation to chase recent winners can be strong, particularly when comparing returns against peers or the broader market. In my view, successful long-term investing requires emotional resilience: resisting FOMO and envy, and staying focused on a portfolio thesis you believe can beat the market on average over the long term. Failing to do so risks buying in during periods of irrational exuberance, or exiting positions precisely when they are most undervalued, often in businesses that were never deeply understood to begin with.

For example, it would be tempting to jump on the bandwagon and simply buy Nvidia or Google at this point. I have deliberately refrained from doing so because these businesses either fall outside my circle of competence, as with semiconductors, or still carry unresolved questions in my assessment of their long-term fundamentals in the age of AI, as with Google (sounds like copium, but I promise it isn't 🤡).

2025 Stock Market Review & State of AI

If I were to summarize 2025 in a single phrase, it would be the Year of Google’s Return. Year to date, among the Magnificent 7, Google delivered the strongest performance, with returns of ~65.5%.

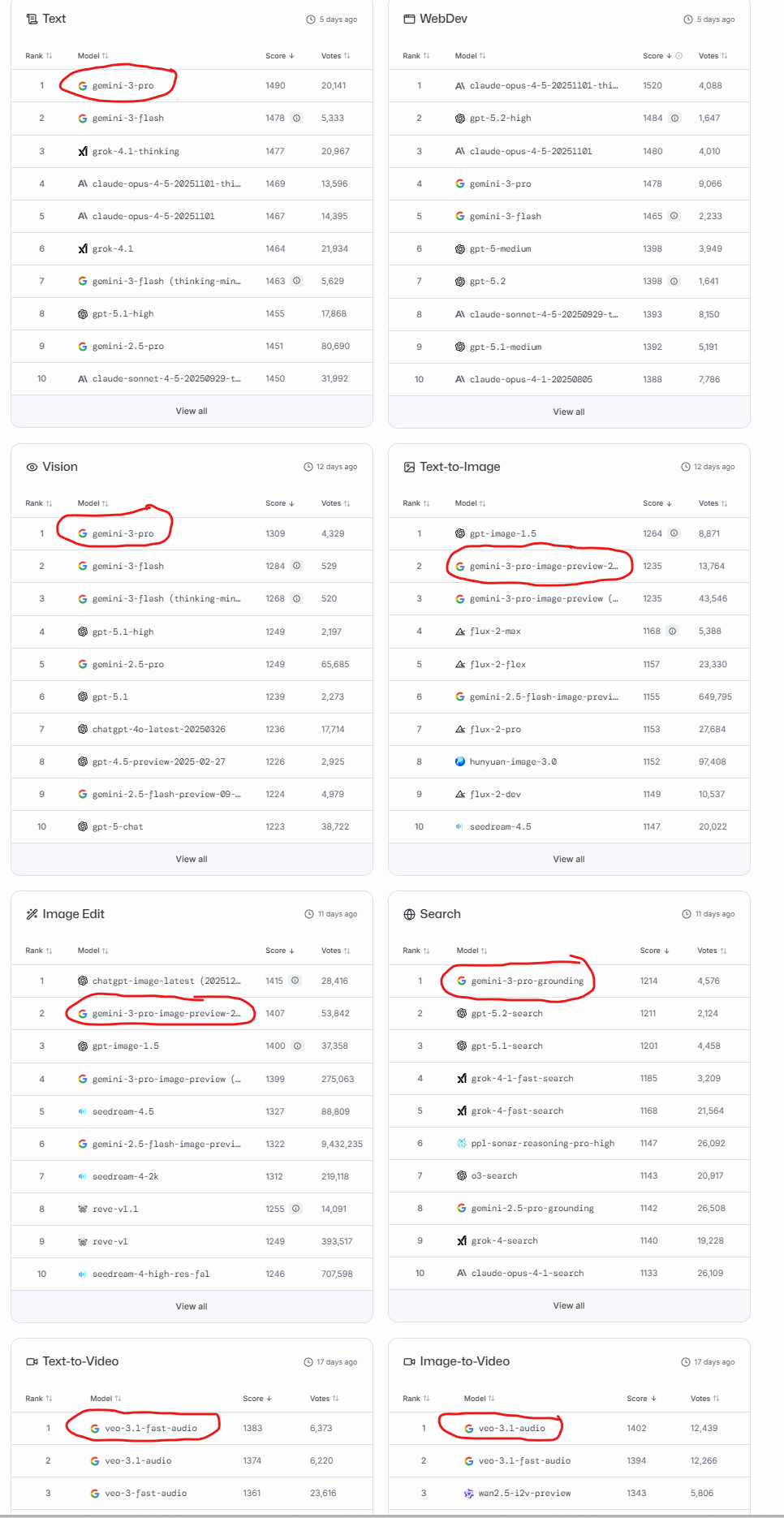

Once a 'laggard' in Generative AI, Google now has Gemini models topping LMArena's leaderboard across most use cases, except coding, which is usually led by Anthropic's Claude or OpenAI's GPT models.

It is hard to ignore that in 2025, the market rewarded companies perceived to be leading in AI.

As of the 26 Dec 2025 close, the Magnificent Seven year-to-date returns are:

- Google 65.5% (Gemini)

- Nvidia 37.76% (Primary AI Infrastructure)

- Tesla 25.29% (Grok, via Musk's xAI)

- Microsoft 16.52% (OpenAI GPT Partnership)

- Apple 12.12% (Apple Intelligence)

- Meta 10.69% (Llama)

- Amazon 5.59% (Nova)

For example, the bottom three Magnificent 7 performers of 2025 (Amazon, Meta and Apple) are all widely viewed as behind in the AI race, and that is more than a coincidence. Even at Amazon, despite its deep partnership with Anthropic, what I'm hearing from internal sources (my cousin has been at AWS for the past 8 years) is that Amazon employees themselves feel the company is behind on AI.

A tempting simplification is: “Whoever leads in AI deserves a higher stock price. Whoever falls behind deserves a lower one.”

The underlying intuition is that the company that achieves superintelligent AI could either:

- Unlock an “AI workforce” that automates knowledge work and replaces human labor, driving massive operating leverage and margin expansion

- Create entirely new products, make scientific discoveries and solve difficult enterprise problems to unlock new TAM (total addressable market)

In both cases, the investment bet is the same: translating AI into materially higher profits.

This leads to two critical questions:

Q1) Are current AI developments actually on a path toward general superintelligence?

Q2) Do AI business models make economic sense once inference costs, competition, and commoditization are fully accounted for?

On the first question, my gut sense is no, at least not by simply scaling today's transformer-based AI models without fundamental architectural breakthroughs.

For non-technical readers, transformers are the core architecture behind Generative AI tools like OpenAI's ChatGPT and Google's Nano Banana. In other words, I am broadly aligned with Yann LeCun's view that scaling up transformer models alone will not get us to Artificial General Intelligence (AGI).

There are three reasons why I believe Generative AI's intelligence gains are plateauing:

1. “Capability Overhang” Signals Incremental Progress

In a recent interview, Sam Altman described AI as experiencing a “capability overhang”: today’s models are already good enough, but underutilized. Alexandr Wang and Satya Nadella have echoed similar ideas. If frontier labs were confident that the next models (e.g. GPT-6 or equivalent) would be a genuine step-function leap toward superintelligence, today’s underutilization would not be a strategic concern, because a truly transformative model would see immediate, overwhelming demand. The focus on overhang suggests that the labs themselves expect incremental intelligence gains rather than an exponential jump. If so, future improvements are unlikely to unlock significantly new economic use cases beyond those already being tested in the market today.

2. Nvidia and the Shift from Pre-Training to Inference

Nvidia’s recent deal with Groq, an inference-focused ASIC company, is telling.

This signals a shift away from the belief that throwing ever more GPUs and data at pre-training will keep unlocking explosive intelligence gains. Groq’s architecture is optimized for low-latency, real-time inference, which matters most in a world where model capabilities improve slowly or remain largely frozen.

General-purpose GPUs (like Nvidia's) excel when intelligence scales rapidly. Specialized inference silicon makes sense only when deployment efficiency, not raw intelligence gains, becomes the bottleneck.

3. Hallucination Has Not Disappeared

Anecdotally, both at work and in personal use, I still see model hallucination (i.e. AI making things up, giving inaccurate answers, or drifting from the original prompt's intent), even as Generative AI models have 'improved' over the last 3 years (e.g. from GPT-3.5 -> 4o -> 5 -> 5.1 -> 5.2). Theo (t3.gg) has also argued that benchmarks matter less and less, and that today's models still hallucinate 3 years after the release of GPT-3.5.

Google's unit economics

On the second question, AI business economics, my single biggest question about Google remains unanswered despite its recent AI leadership with Gemini: how will the company cross the Rubicon from a traditionally high-margin search business to a lower-margin answer engine in the age of AI?

I know it is difficult to make the pessimistic case given Google's outperformance in 2025, but remember that a common trap is letting the recent stock price drive the narrative: concluding that “XYZ company is doing well fundamentally and will continue to do so” when, in reality, recent price appreciation can distort and bias one's fundamental analysis of the future.

Quoting Fawkes Capital Management's recent letter on Google's transition to a capital-intensive company:

> This year alone, Google will spend roughly $60 billion more in annualized capex than it did before ChatGPT launched. Since late 2022, the company has deployed an additional $85 billion in cumulative capex on AI-related development. With similar spending levels expected next year, Google’s capex now exceeds its net profit – a sharp departure from pre-AI years, when capex represented only about 25% of profit. In effect, a business once celebrated as a capital-light software platform is being pulled toward becoming a capital intensive infrastructure and construction company.

as well as on what Google can expect in return for its Gemini investments in the Generative AI race:

> What is Google receiving in return for this extraordinary level of investment? At present, Google processes roughly 1.4 quadrillion AI tokens per month. If we make a simplifying assumption and apply Google’s API input pricing across all of those tokens, the result is an additional $21 billion of annualized revenue. For context, this is not an especially compelling trade-off: $85 billion of incremental capex for $21 billion of low-margin revenue. In effect, Google is deploying vast sums of capital for what amounts to a modest 5% uplift in annual revenue, and materially lower returns than its core search and advertising franchise generates.
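The letter's arithmetic can be reproduced in a few lines. This Python sketch uses only the figures quoted above; the per-token price and revenue base it prints are back-solved from those figures (not taken from Google's price list or filings), and the variable names are my own.

```python
# Figures quoted from the Fawkes Capital Management letter above.
TOKENS_PER_MONTH = 1.4e15        # ~1.4 quadrillion AI tokens per month
ANNUALIZED_AI_REVENUE = 21e9     # the letter's estimate, USD
INCREMENTAL_CAPEX = 85e9         # cumulative AI capex since late 2022, USD
REVENUE_UPLIFT = 0.05            # "a modest 5% uplift in annual revenue"

tokens_per_year = TOKENS_PER_MONTH * 12

# Back-solve the flat price per million tokens the estimate implies
# (assumption, as in the letter: one input price applied to every token).
implied_price_per_million = ANNUALIZED_AI_REVENUE / tokens_per_year * 1e6

# Annual revenue base implied by calling $21B a 5% uplift.
implied_revenue_base = ANNUALIZED_AI_REVENUE / REVENUE_UPLIFT

# Years of *gross* AI revenue needed just to equal the incremental capex
# (ignores margins, depreciation and the time value of money).
years_to_match_capex = INCREMENTAL_CAPEX / ANNUALIZED_AI_REVENUE

print(f"Tokens per year: {tokens_per_year:.3g}")
print(f"Implied price per 1M tokens: ${implied_price_per_million:.2f}")
print(f"Implied annual revenue base: ${implied_revenue_base / 1e9:.0f}B")
print(f"Years of gross AI revenue to match capex: {years_to_match_capex:.1f}")
```

With these inputs, the estimate implies roughly $1.25 per million tokens and an annual revenue base of about $420 billion, and the $85 billion of capex equals about four years of that gross AI revenue before any costs are deducted.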

I am of the view that Google would have preferred a world where transformer-based Generative AI models like GPT never took the world by storm, and the search business kept chugging along at high margins, which is ironic given that Google itself invented the transformer. But ChatGPT's release in late 2022 forced Google into a prisoner's dilemma and a lower-margin race: invest aggressively or risk irrelevance, even if returns deteriorate.

In the premium subscriber section that follows, I cover:

- My next investment move related to Google given the above analysis

- Which companies actually benefit from today’s AI and stand to be the real big winners

- My Investing Plan for AI related companies going into 2026

My next investment move related to Google given the above analysis

On Google, I am...